Getting AI to Be Your Beta Reviewer

So I had this idea a few weeks ago: what if AI could review my book (at any point in the writing process) and give me a simple beta review with suggestions for improvement?

My first step down what became a two-week programming rabbit hole was a prompt I gave ChatGPT. (If you’re not sure what that is, please see this article.)

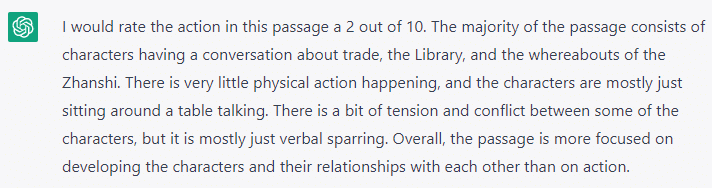

describe the action in this passage on a scale of 1 to 10, with 10 being high action and 1 being boring (and then my passage)

Of course, I didn’t expect a glowing review, since I had just finished this first draft, but it did give me some things to think about.

After making changes to the passage, I tried again (on the same chat thread).

Eventually, I got the action to 7 out of 10, which I would later learn meant “Very high action, incredibly exciting” to this faceless robot.

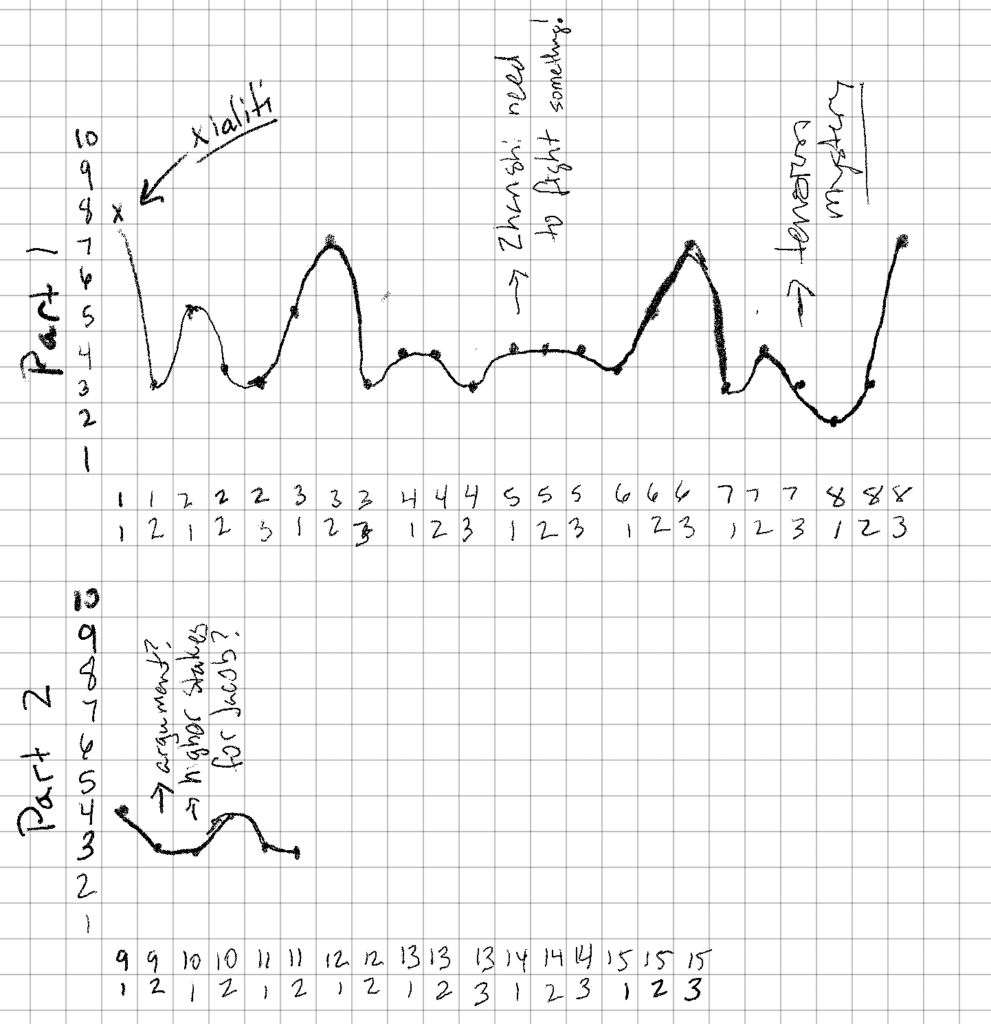

I did this for everything I’d written so far (maybe a third of the book) and graphed the results. It took a while to cut and paste each passage with the same prompt, but in the end I had something I thought I could work with.

I added a few more elements (characterization, dialogue, pacing, and so on) and tried again with several more passages. ChatGPT limits how much text you can give it at once, so this took a lot of time. I didn’t graph those results, but all of this cutting and pasting gave me an idea.

What if I could get ChatGPT to do this same thing for the entire book? What about other books that I know need revision? Would it help or hinder me?

Could I create an AI beta reader?

Well, programming and debugging take time, so I haven’t written much in two weeks. But I think I’ve found a way to tie Excel, ChatGPT, Scrivener, and Word together to produce an analysis of any book (in small chunks) that I can print out and use as a guide when examining my first draft.

Here’s a snippet of one section of a chapter in my work in progress after my first attempt at an automated analysis:

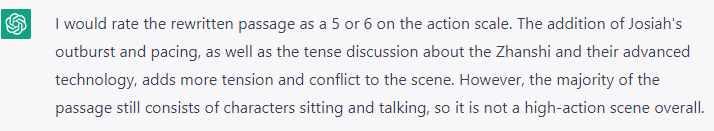

Action: 4 – There is some movement and exploration, but not much actual action.

Emotional Impact: 2 – There isn’t much emotional depth or impact on the reader in this passage.

Clarity: 8 – The author does a good job of conveying the setting and the characters’ actions and reactions.

Content: 4 – While there is some story development, it doesn’t feel like much is happening in this passage.

Pacing: 6 – The pacing is steady, not too slow or too fast, but could benefit from a bit more action or momentum.

Characterization: 6 – While the characters have some unique traits, they don’t feel fully formed or developed yet.

Dialogue: 5 – The dialogue feels natural, but could benefit from more depth or character development.

Style: 7 – The writing style is clear and engaging, but isn’t particularly unique or memorable.

Mechanics: 8 – There are no grammatical errors noted.

Quality: 6 – While the writing is competent, there’s room for improvement in terms of storytelling and emotional depth.

Suggestions for Improvement: The story could benefit from more action and momentum, as well as more emotional depth and character development. There’s potential for a unique and engaging story, but it needs more development and polish.
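Because each scored line follows the same “Element: score – comment” pattern, the numbers can be pulled out for graphing. Here’s a minimal Python sketch of that parsing step. It’s my own illustration, not BetaBot’s macro code, and it assumes every scored line looks like the ones above (an en dash, em dash, or hyphen after the number).

```python
import re

# Matches lines such as "Action: 4 – There is some movement..."
# The separator after the score may be an en dash, em dash, or hyphen.
SCORE_LINE = re.compile(r"^\s*([A-Za-z ]+?):\s*(\d{1,2})\s*[\u2013\u2014-]\s*(.*)$")


def parse_scores(analysis: str) -> dict[str, tuple[int, str]]:
    """Return {element: (score, comment)} for each scored line in a reply."""
    scores: dict[str, tuple[int, str]] = {}
    for line in analysis.splitlines():
        match = SCORE_LINE.match(line)
        if match:  # "Suggestions for Improvement: ..." has no score, so it is skipped
            element, score, comment = match.groups()
            scores[element.strip()] = (int(score), comment.strip())
    return scores


reply = (
    "Action: 4 \u2013 There is some movement and exploration.\n"
    "Emotional Impact: 2 \u2013 There isn't much emotional depth.\n"
    "Suggestions for Improvement: More action and momentum."
)
print(parse_scores(reply)["Action"])  # (4, 'There is some movement and exploration.')
```

From there, the scores for each passage can go straight into an Excel chart.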

This may not seem very detailed, but it becomes much more so if you ask for an analysis of each element (e.g., pacing, mechanics, content) separately and then put it all together at the end. In other words, instead of 1 request, you make 11.
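Here’s a rough Python sketch of what “11 requests instead of 1” could look like. I’m assuming the 11 are the ten scored elements from the sample above plus one request for suggestions; the prompt wording and the name `build_detailed_prompts` are hypothetical, not BetaBot’s actual prompts.

```python
# The ten scored elements from the sample analysis above.
ELEMENTS = (
    "Action", "Emotional Impact", "Clarity", "Content", "Pacing",
    "Characterization", "Dialogue", "Style", "Mechanics", "Quality",
)


def build_detailed_prompts(passage: str, genre: str) -> list[str]:
    """One prompt per element, plus a final request for suggestions (11 total)."""
    prompts = [
        f"Rate the {element.lower()} in this {genre} passage on a scale of 1 to 10 "
        f"and explain your rating in detail:\n\n{passage}"
        for element in ELEMENTS
    ]
    prompts.append(
        f"Give detailed suggestions for improving this {genre} passage:\n\n{passage}"
    )
    return prompts


prompts = build_detailed_prompts("The door creaked open.", "thriller")
print(len(prompts))  # 11
```

Each prompt is sent separately, and the answers are stitched together into one report at the end.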

So what does that look like? If you want to see a more detailed analysis, click here.

All of this programming, debugging, and tearing my hair out led to the following:

Meet BetaBot

(Actually, the name BetaBot was suggested to me by ChatGPT after I finished the program, so there’s that. The robot wanted to call itself “NovelGenius,” but I’m pretty sure that was an egotistical move on its part. Since I’m essentially using AI as a beta reader, the first name fit better. You don’t want to know the other names it came up with.)

BetaBot is really an Excel spreadsheet with several built-in macros that perform some exceedingly repetitive tasks through OpenAI’s API (application programming interface). To use BetaBot, you have to sign up for your own API key, but it’s painless.
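BetaBot’s macros live in Excel, but the underlying call looks the same from any language. As a hedged illustration, here’s a Python sketch that builds a request for OpenAI’s Chat Completions endpoint using only the standard library. The `gpt-3.5-turbo` model is an assumption (it’s the model that matched the $0.002 price mentioned below), and `build_request` and `send` are hypothetical helper names.

```python
import json
import urllib.request

API_URL = "https://api.openai.com/v1/chat/completions"


def build_request(api_key: str, prompt: str, model: str = "gpt-3.5-turbo",
                  temperature: float = 1.0) -> urllib.request.Request:
    """Build (but do not send) a Chat Completions request for one prompt."""
    body = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "temperature": temperature,  # 1.0 is the API's default
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",  # your own API key
            "Content-Type": "application/json",
        },
        method="POST",
    )


def send(request: urllib.request.Request) -> str:
    """Send the request and return the text of the model's reply."""
    with urllib.request.urlopen(request) as response:
        reply = json.load(response)
    return reply["choices"][0]["message"]["content"]
```

Usage would be `send(build_request(my_key, prompt))`, which needs a real key and an Internet connection.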

Note: Given the massive interest in ChatGPT and its tendency to get overloaded for free users, I would recommend signing up for ChatGPT Plus so that wait times are eliminated. It’s not that expensive (and much cheaper than some beta readers I’ve hired in the past). The API also costs a bit of money. While there is a free period, you may run through it quickly. As of April 2023, it costs $0.002 per 1,000 tokens. Without getting into what a token is, this works out to less than a penny (about $0.008) for the maximum amount of data sent and returned in a single request.
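Here’s the per-request arithmetic in Python. It assumes the `gpt-3.5-turbo` limit of 4,096 tokens per request (prompt and reply combined) that applied in April 2023; the constant and function names are my own.

```python
# Assumptions: gpt-3.5-turbo pricing and context limit as of April 2023.
PRICE_PER_1K_TOKENS = 0.002    # US dollars
MAX_TOKENS_PER_REQUEST = 4096  # prompt + reply combined


def request_cost(tokens: int, price_per_1k: float = PRICE_PER_1K_TOKENS) -> float:
    """Dollar cost of a request that uses `tokens` tokens in total."""
    return tokens / 1000 * price_per_1k


print(f"${request_cost(MAX_TOKENS_PER_REQUEST):.4f}")  # $0.0082
```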

Here are the basic requirements:

- An Internet connection

- A ChatGPT API Key (required)

- Microsoft Excel (required)

- Microsoft Word (optional, but you probably have it if you have Excel)

- Scrivener (optional, and very useful to break apart your book into manageable chunks)

- A book (or block of text)

That’s pretty much it. How long BetaBot takes to read your book depends on its length and your desired level of detail, but you’ll get a response for a 2,500-word piece in about 30 seconds with a “basic” analysis, or about 2 minutes for a detailed one. Multiply that out for a 100,000-word book (40 chunks), and you might be looking at about 20 minutes, or 1 hour 20 minutes for a highly detailed return.
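That estimate is simple multiplication: a 100,000-word book is 40 chunks of 2,500 words. This small Python helper (a hypothetical name, using the rough timings above) does the math for any length.

```python
import math


def estimate_minutes(total_words: int, seconds_per_chunk: float,
                     chunk_words: int = 2500) -> float:
    """Rough run time: number of 2,500-word chunks times seconds per chunk."""
    chunks = math.ceil(total_words / chunk_words)
    return chunks * seconds_per_chunk / 60


print(estimate_minutes(100_000, 30))   # 20.0 (basic)
print(estimate_minutes(100_000, 120))  # 80.0 (detailed, i.e. 1 hour 20 minutes)
```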

Really, that’s not bad. I’m still waiting on a human beta reader after a month.

How it works is simple. (This was all built on a PC, so I have no clue what this would do on an Apple computer. Sorry.)

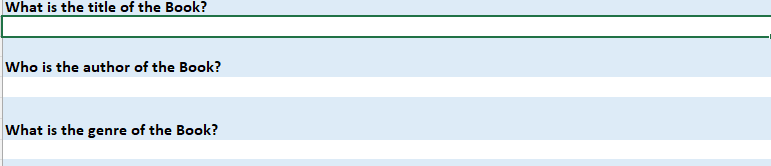

Setup

After the API key has been entered, a human (you) types in the name of the book and the author (probably you), then selects the genre they think the book fits into.

Input

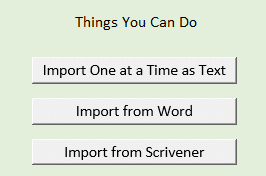

You input a block of text through a (very) simple text editor, or a Word or Scrivener input tool.

(These are macros that walk you through the painless process.)

If your book is long, it may take a minute. There are some initial things you’ll want to do in Scrivener if you want a more robust analysis. For example, break up your book into sections (as pictured below).

If your chapter is 5,000 words or more, for example, you probably already have it broken down into different sections (e.g., a # or — between them) within a single document. Break these into separate documents. That way the imported text will definitely be within BetaBot’s limits. If you don’t, only the first 2,500 words or so of your chapter will be analyzed and you could end up with different results.
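If you’d like to check your sections before importing, here’s a minimal Python sketch that splits a chapter at scene-break lines and flags any piece still over about 2,500 words. It assumes a break is a line containing only `#` characters or only em dash characters; this isn’t part of BetaBot, which relies on separate Scrivener documents for the split.

```python
import re

# Assumption: a scene break is a line holding only "#" characters or only
# em dashes (written as \u2014 in the pattern), e.g. "#" or "###".
SCENE_BREAK = re.compile(r"^[ \t]*(?:#+|\u2014+)[ \t]*$", re.MULTILINE)
WORD_LIMIT = 2500  # approximate per-section limit


def split_scenes(chapter: str) -> list[str]:
    """Split a chapter at scene-break lines, dropping empty pieces."""
    return [part.strip() for part in SCENE_BREAK.split(chapter) if part.strip()]


def over_limit(scenes: list[str], limit: int = WORD_LIMIT) -> list[int]:
    """Indexes of scenes that are still longer than the word limit."""
    return [i for i, scene in enumerate(scenes) if len(scene.split()) > limit]


chapter = "She opened the door.\n#\nThe storm hit at dawn.\n\u2014\u2014\u2014\nMorning came."
scenes = split_scenes(chapter)
print(len(scenes))         # 3
print(over_limit(scenes))  # []
```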

Importing from Word is a little different. Rather than break up your book by headings (which, I’ve heard, is not a common way for writers to write), the import tool separates your text into 2,500-word chunks. It’s not perfect, but the results are still pretty good.
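A fixed-size word chunker like that can be sketched in a few lines of Python. This is an illustration, not the BetaBot import macro; note that it also collapses paragraph breaks into single spaces, which a real tool would probably want to preserve.

```python
def chunk_words(text: str, size: int = 2500) -> list[str]:
    """Split text into consecutive chunks of at most `size` words."""
    words = text.split()  # note: this collapses paragraph breaks into spaces
    return [" ".join(words[i:i + size]) for i in range(0, len(words), size)]


chunks = chunk_words("word " * 6000)
print([len(c.split()) for c in chunks])  # [2500, 2500, 1000]
```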

Analyze

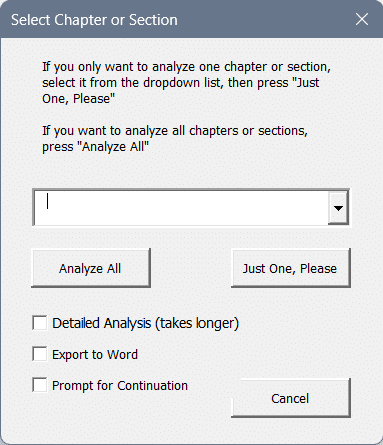

After you have your text imported, you simply click on the “Analyze One or All” button. When you do, you’ll be given some choices.

You can analyze all of your text at once with the “Analyze All” button or just one passage at a time. For your first go-round, I recommend running just one passage. Simply select it from the dropdown list provided and press “Just One, Please.”

Checking “Detailed Analysis (takes longer)” will give you a much (much) more detailed analysis of all ten elements in the passage plus suggestions for improvement, but it will take much (much) longer (as it says). Still, it’s worth it for those really troublesome passages.

If you check “Export to Word,” the Export Tool will open when the analysis finishes. (See “Export the Report” below.)

“Prompt for Continuation” is unchecked by default. If you check it, you’ll be asked after each section’s analysis whether you want to continue to the next one (assuming you pressed the “Analyze All” button). With it unchecked, you have to wait for the whole analysis to complete before doing anything else. (You could, of course, make a sandwich, exercise, or meditate.)
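Here’s a hedged Python sketch of how an “Analyze All” loop with an optional continuation prompt might be structured. The `analyze` callback stands in for whatever sends one section to the API, and the `ask` callback defaults to a console yes/no question; both names are hypothetical.

```python
from typing import Callable


def ask_console(message: str) -> bool:
    """Default yes/no prompt on the console."""
    return input(message).strip().lower().startswith("y")


def analyze_all(sections: list[str],
                analyze: Callable[[str], str],
                prompt_for_continuation: bool = False,
                ask: Callable[[str], bool] = ask_console) -> list[str]:
    """Analyze sections in order, optionally asking before moving on."""
    results: list[str] = []
    for number, section in enumerate(sections, start=1):
        results.append(analyze(section))
        more_to_do = number < len(sections)
        if prompt_for_continuation and more_to_do:
            if not ask(f"Section {number} done. Continue to the next one? (y/n) "):
                break  # stop early; keep what has been analyzed so far
    return results


print(analyze_all(["Chapter 1", "Chapter 2"], lambda s: f"analysis of {s}"))
```

With the box unchecked, the loop simply runs to the end, which is where the sandwich comes in.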

Generating Genre-Specific Rubric

You can also generate a rubric for your particular genre. Rubrics differ from genre to genre, and it might be helpful to know what the AI is thinking. While a generic rubric is provided as a tab in the workbook, BetaBot will analyze your text based on the genre you selected.
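Generating a rubric is just one more request. A hypothetical prompt builder might look like this in Python; the wording is my own guess, not what BetaBot actually sends.

```python
ELEMENTS = (
    "Action", "Emotional Impact", "Clarity", "Content", "Pacing",
    "Characterization", "Dialogue", "Style", "Mechanics", "Quality",
)


def rubric_prompt(genre: str, elements: tuple[str, ...] = ELEMENTS) -> str:
    """Ask the model what scores of 1, 5, and 10 mean for each element in a genre."""
    element_list = ", ".join(elements)
    return (
        f"Create a rubric for evaluating {genre} fiction. For each of these "
        f"elements ({element_list}), describe what a score of 1, 5, and 10 "
        f"would mean in this genre, and note which elements matter most."
    )


print(rubric_prompt("thriller"))
```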

For example, in a thriller, a high rating for action and pacing may be more important than in literary fiction. A high rating for clarity may also be more important in a thriller where the plot is more complex and requires clear communication to maintain suspense.

On the other hand, in literary fiction, a high rating for characterization, dialogue, and style may be more important since the focus is often on exploring complex characters and themes. The emotional impact may also be more important since literary fiction often aims to evoke deep emotions and connect with readers on a personal level.

Therefore, the importance of each category and the corresponding ratings may vary depending on the genre and the specific goals and priorities of the work.
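To make the idea concrete, here’s a Python sketch of a genre-weighted overall score. BetaBot doesn’t necessarily compute anything like this; the weights are invented purely for illustration, doubling action and pacing for a thriller and characterization and style for literary fiction.

```python
def weighted_score(scores: dict[str, int], weights: dict[str, float]) -> float:
    """Weighted average of 1-10 scores; unweighted elements count as 1.0."""
    total_weight = sum(weights.get(name, 1.0) for name in scores)
    weighted_sum = sum(score * weights.get(name, 1.0) for name, score in scores.items())
    return weighted_sum / total_weight


# Scores from the sample analysis above; the weights are hypothetical.
scores = {"Action": 4, "Pacing": 6, "Characterization": 6, "Style": 7}
thriller = {"Action": 2.0, "Pacing": 2.0}
literary = {"Characterization": 2.0, "Style": 2.0}
print(weighted_score(scores, thriller))  # 5.5
print(weighted_score(scores, literary))  # 6.0
```

The same passage comes out lower as a thriller than as literary fiction, which is the point of genre-specific rubrics.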

Export the Report

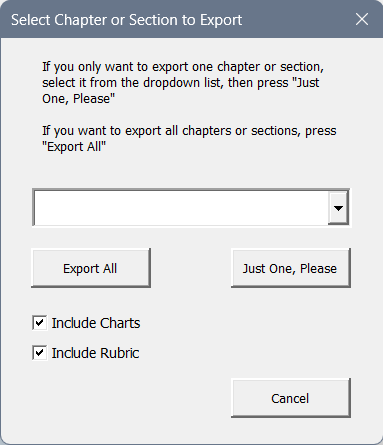

Once the analysis (or beta read) is done, export it to Word by clicking the “Export Analysis to Word” button (if you didn’t check “Export to Word” the first time).

Here, you can uncheck “Include Charts” if you don’t want them (it’s checked by default).

You can also uncheck “Include Rubric” (which is, by default, checked). If you haven’t generated a rubric for your chosen genre, BetaBot will export the generic rubric for you. If you have generated one, you’ll get that instead.

Click “Export All” to export the entire analysis. This will open a Word document with your analysis. You will need to save it under your own file name when the export is complete.

If you just want to export one section, you’ll be given that same dropdown list. Simply pick the section and click on “Just One, Please.” Note that charts are not exported when selecting only one section, regardless of your choice. The rubric, however, can be included if you want it.

Some Caveats (because there have to be some, right?)

While your intellectual property is protected (BetaBot uses a chat model that retains no memory of the questions asked), there are some restrictions on what can be analyzed. To see a list, review https://openai.com/policies/usage-policies.

As you and I both know, our fiction sometimes falls into these categories. In that case, BetaBot will return an error, and a human will have to intervene.

In addition, OpenAI states that “As of March 1st, 2023, we retain your API data for 30 days but no longer use your data sent via the API to improve our models.” That means your work is not being used to train their language models. This has been a concern for many creatives since the launch of ChatGPT and other AI generators (e.g., for images or audio).

The Next Steps

While this is still in development, there are a few things I want to work on:

- keep the analysis to a single chat thread so that context is retained

- compare BetaBot’s review to a sampling of human reviewers using the same criteria; this might take some time, but it’ll be good to know if there are major differences

- adjust the “temperature” of the request to be either more random or more deterministic and then compare the results

- clean up the code so it doesn’t look like a first grader wrote it (because, you know, first graders can program these days)
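For the first and third items, here’s one common approach, offered as an assumption rather than something BetaBot does yet. The Chat Completions API doesn’t remember earlier requests, so “keeping a single thread” means resending the message history each time, and `temperature` is just a field in the request body (lower values make replies more deterministic). The catch is that the history counts against the token limit, so a whole book won’t fit in one thread. A minimal Python sketch, with a hypothetical `ChatThread` class:

```python
class ChatThread:
    """Keeps a running message history so each request carries earlier context."""

    def __init__(self, system_prompt: str, model: str = "gpt-3.5-turbo",
                 temperature: float = 1.0) -> None:
        self.model = model
        self.temperature = temperature  # lower = more deterministic
        self.messages = [{"role": "system", "content": system_prompt}]

    def next_request_body(self, user_text: str) -> dict:
        """Add the user's text and return a Chat Completions request body."""
        self.messages.append({"role": "user", "content": user_text})
        return {"model": self.model, "messages": list(self.messages),
                "temperature": self.temperature}

    def record_reply(self, reply_text: str) -> None:
        """Store the model's answer so the next request includes it."""
        self.messages.append({"role": "assistant", "content": reply_text})


thread = ChatThread("You are a beta reader for a thriller.", temperature=0.2)
first = thread.next_request_body("Analyze passage 1: (passage text)")
thread.record_reply("Action: 4 (model's analysis)")
second = thread.next_request_body("Analyze passage 2: (passage text)")
print(len(second["messages"]))  # 4: system, user, assistant, user
```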